The IBM Planning Analytics (TM1) ODBCOutput function is used in TurboIntegrator (TI) script to execute a SQL Update or Insert query against an open ODBC data source.

ODBCOutput is very useful but can be slow if exporting large numbers of records, especially in a cloud environment. A typical TI process that uses ODBCOutput will have a TM1 cube view as a data source where the ODBC connection is established (using ODBCOpen) on the Prolog tab, and then ODBCOutput is called on the Data tab, so that it executes once for every value in the data source.

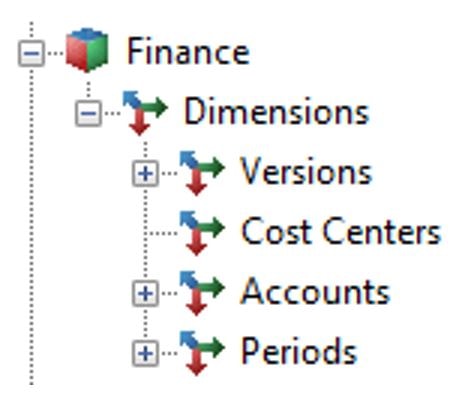

Let’s use this Finance cube with 4 dimensions as an example. Suppose we have created a data source cube view on this cube to upload Budget values to a table called BudgetAccountBalances in the relational data warehouse.

The TI process will loop through all the Budget values in this Finance cube, and build a SQL statement for each value that looks something like this:

| INSERT INTO BudgetAccountBalances ([RunTime],[Version],[Period],[CostCenter],[Account],[Value])

VALUES ( vRunTime, dVersion, dPeriod, dCostCenter, dAccount, dValue ); |

The TI script to generate the SQL statement, and execute it using the ODBCoutput function looks something like this:

ODBCOUTPUT(<ODBC Source>, ‘ ‘INSERT INTO BudgetAccountBalances ([RunTime],[Version],[Period],[CostCenter],[Account],[Value])’,

‘VALUES (‘

| CHAR(39) | vRunTime | CHAR(39) | ‘, ‘

| CHAR(39) | dVersion | CHAR(39) | ‘, ‘

| CHAR(39) | dPeriod | CHAR(39) | ‘ , ‘

| CHAR(39) | dCostCenter | CHAR(39) | ‘ , ‘

| CHAR(39) | dAccount | CHAR(39) | ‘ , ‘

| CHAR(39) | NUMBERTOSTRING(dValue) | CHAR(39) | ‘ );’);

In this statement, vRuntime is defined in the Prolog where it grabs the current day and time, and the variables that begin with “d” all come from the data source, and are named on the TI process Variables tab.

For each record, the TI process executes this statement. In a cloud environment or a slow network, this can take a long time because the process must create this statement, communicate across networks (from wherever the TM1 model is hosted to wherever the target data warehouse is located), and then execute this command in the data warehouse. In one instance we have seen a process with around 200,000 records take 3+ hours!

To work around this situation, what you can do is create a longer SQL INSERT statement that appends multiple records from the data source at one time. With a longer INSERT statement, the ODBCOutput command is executed fewer times and there is less communication between networks even though the same number of records get written to the target. The result is a performance improvement! Furthermore, sending “batches” of records to the target data warehouse at once enhances performance there too!.

Suppose we want to create a batch for every 5 records (the batch size). Then the form of the batched SQL statement will look something like this:

| INSERT INTO BudgetAccountBalances ([RunTime],[Version],[Period],[CostCenter],[Account],[Value])

VALUES ( vRunTime1, dVersion1, dPeriod1, dCostCenter1, dAccount1, dValue1 ) , ( vRunTime2, dVersion2, dPeriod2, dCostCenter2, dAccount2, dValue2 ) , ( vRunTime3, dVersion3, dPeriod3, dCostCenter3, dAccount3, dValue3 ) , ( vRunTime4, dVersion4, dPeriod4, dCostCenter4, dAccount4, dValue4 ) , ( vRunTime5, dVersion5, dPeriod5, dCostCenter5, dAccount5, dValue5 ) ; |

Below is what the code in the Data tab would look like by taking this approach. Notice that there is a counter (vRecord) that is incremented for each data source value, as the SQL statement is extended. ODBCoutput is only called when the counter reaches the batch size (vExportForEveryxRecords). After the batch is sent, the counter resets to 1, and construction of the next batch of records commences.

IF( vSQLQuery @= ”);

vSQLQuery = ‘INSERT INTO BudgetAccountBalances ([RunTime],[Version],[Period],[CostCenter],[Account],[Value]) VALUES’;

ENDIF;

vRecordToAdd = ‘(‘

| CHAR(39) | vRunTime | CHAR(39) | ‘, ‘

| CHAR(39) | dVersion | CHAR(39) | ‘, ‘

| CHAR(39) | dPeriod | CHAR(39) | ‘ , ‘

| CHAR(39) | dCostCenter | CHAR(39) | ‘ , ‘

| CHAR(39) | dAccount | CHAR(39) | ‘ , ‘

| CHAR(39) | NUMBERTOSTRING(dValue) | CHAR(39) | ‘ )’;

vSQLQuery = vSQLQuery | IF( vRecord > 1 , ‘,’ , ”) | vRecordToAdd ;

IF( vRecord = vExportForEveryxRecords );

ODBCOutput( ODBCSource, vSQLQuery);

vSQLQuery = ”;

vRecord = 1;

ELSE;

vRecord = vRecord + 1;

ENDIF;

In this example vSQLQuery is defined in the Prolog as a blank string statement and then is redefined on multiple times on the data tab. vRecordtoAdd is defined in the Prolog as a blank string statement and then is redefined on the data tab. vRecord is defined in the Prolog as 0, and then is redefined multiple times on the data tab. vExportForEveryxRecords is defined on the Prolog as however many records should be included in a “batch”. If going with the “batch” approach, it is required to have this code in the epilog that picks up the last remaining records that are smaller than the full “batch” amount:

IF( vSQLQuery @<> ”);

ODBCOutput(ODBCSource, vSQLQuery);

ENDIF;

ODBCClose(ODBCSource);

Although batching adds complexity to the TI script code, it is worth it! Returning to the earlier example where 200,000 records took 3+ hours to process without batching, processing time improved dramatically to 7 minutes with batching, using batches of 500!