When working with IBM Planning Analytics, it is not uncommon to source data from a relational data source.

Typically, you will see a Data Warehouse or Data Lake where data is transformed and loaded on a schedule using an ETL tool. ETL, which stands for Extract, Transform and Load, extracts data from a source, modifies it in some way (to meet a specific requirement), and then places it into another location where the transformed data can more easily be sourced by a consumer or downstream application.

One example of an ETL tool is SQL Server Integration Services or “SSIS”. SSIS is part of Microsoft’s Business Intelligence Suite and is widely used to achieve data integration. This platform is designed to solve issues related to data integration and workflow applications and is a robust option for scheduled data loads as well as performing routine database maintenance.

You use SQL Server Integration Services to deploy ETL “packages”. These SSIS packages can automate various tasks such as:

- Archiving data

- Loading data

- Transferring data (from one data source to another)

- Data cleansing or transformation

- Other importance (yet mundane) DBA tasks like purging old files or indexing a database.

Usually, you deploy a SSIS package using a SQL Agent job and configure it to run as per a defined schedule.

Error Handling in SSIS Packages

As part of a best practice SSIS design, you need to (among other things) make sure all SSIS packages properly traps for errors and, if there is a problem, can easily be restarted, preferably without having to rerun the entire package from the beginning. These design principals will make the life of the DBA or those responsible for the results of the package, easier by allowing them to more quickly troubleshoot and resolve issues should a package fail.

Tell Me About It

SSIS packages are made up of “tasks”. The Send Mail task can be configured to automatically send an e-mail message if tasks in a package succeed or fail, or send messages in response to an error that the package may raise during its execution. For example, a package can notify a database administrator about the success or failure of a data load so that it can (hopefully) be resolved quickly and downstream applications (who consume that data) can have the “freshest” version of the data, on schedule.

How fresh is the data in Planning Analytics?

Let’s suppose that there is an SSIS package running on a regular interval that performs extraction and transformation on SQL Server data and then loads the transformed data into a relational table that a Planning Analytics process queries (by way of an ODBC Data Source). That process then loads the data into PA cubes for consumption by financial analysts. If the SSIS package fails, and the table that PA “sources” are not updated, the data being consumed by the financial analysts is out of date or “stale”.

If the SSIS package is configured to send an email alert upon failure, it then is the responsibility of those who received the email, to inform all of the financial analysts (and other consumers of the data) of what’s going on– not an optimal situation.

A Better Option

Rather than being dependent on the source system (or an individual) to alert the Planning Analytic users as to the freshness of data, why not program Planning Analytics to display how current the source data is?

Last Update Date

Some (most) database tables will include a “last update date” column, which stores the date and time when each row was last updated. In other words, every time a row is updated, the last update data column is updated to reflect the date and time of that update. In SQL Server, you can use a trigger to automatically perform this update (a trigger is a special type of stored procedure that executes when an event occurs in the database server). Using this column, you can determine when each row in a table were last changed.

Assuming that the Planning Analytics source tables includes a last update column, we could figure out when certain rows in a table were last updated, but that can be tricky, especially to determine if rows of data was deleted or if there are thousands (or more) rows of data.

A Better Approach

An alternative approach that I like is to make use of the SQL Server CHECKSUM function, which returns the binary checksum value computed over the rows of a table. A checksum is a “hash value” that can be used to determine equality between values. In other words, if you compare hash values from a table – computed at different times – you can determine if that table’s data has been updated or changed in anyway.

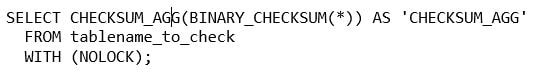

In the following query, I calculate and return the hash value for the table named “tablename_to_check”, which would return a number something like this: 940335729. If I wait 24 hours (for example), rerun the query and receive the same hash value, then I know that the data in that table has not changed in anyway (no additions, updates or deletions of any kind) and I can then either presume that the data is “stale” (the SSIS package failed to update the table) or (in the least) none of the data that Planning Analytics is consuming has changed since yesterday – which most likely is not probable.

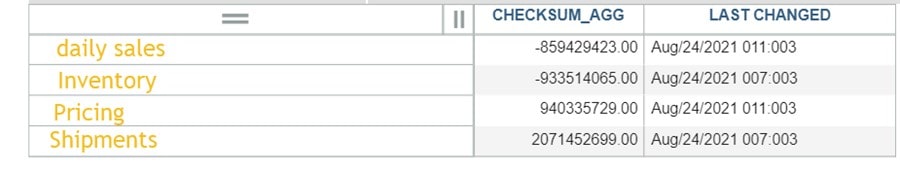

So at a high level, I created measures for each SQL table that Planning Analytics is using as a data source to store the latest hash value for each table as well as the “last updated” date and time that the hash value changed. My TurboIntegrator process runs the CHECKSUM query for the table, compares the returned value with what I’ve stored previously and if it is different, updates the hash value measure with the date and time.

Now my Planning Analytics users can access these values on their dashboards and know just when their source data was last refreshed (hint: you can hide the checksum values)!