| Title: | Loading Data to IBM Planning Analytics using TM1Py and Pandas Part 2 |

| Author(s): | Paul Caggiano |

| Application: | Planning Analytics, Python |

| Software Version: | Planning Analytics Version 11.8.01300.1; Python 3.9.12 |

| Keywords: | TM1Py, Python, Planning Analytics, TM1, Pandas |

This article is Part 2 of the series exploring how to integrate TM1Py with your IBM Planning Analytics (PA) instance to improve efficiency when loading data generated by a Python script. Part 1 of this series, covers how to create a connection between TM1Py and your PA instance. In this article, we will prepare our dataframe for an upload to PA.

Uploading Data from a Pandas Dataframe

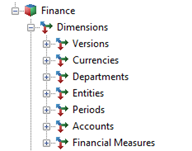

As mentioned, TM1Py comes with the ability to write directly to PA from a Pandas dataframe. The dataframe must have the same number of columns as the number of dimensions in the target cube, plus an additional column for the value, similar to an upload file. The columns must be in the same order as dimensions in the cube. Take the example below showing the cube dimension order, as well as the Pandas dataframe containing values output by an automated forecast.

Once the dataframe is prepared, an optional step is to convert numeric element names to “objects”. For example, in our dataframe the “Periods” column will default to type “int”, however TM1py will return an error if the element names are not of the type “object”. Additionally, it is a good practice to convert Values to type “int” as these are our numerical values. The code below will accomplish this.

This code utilizes a dictionary, the variable data_types_dict, to set the columns shown to the data types desired; “Periods” to a string/object and “Values” to an int. Then, we reassign the original dataframe, variable df, to the dataframe that references our new data types using the astype() function from Pandas. More information on functions for the Pandas library can be found here.

Now that the dataframe is set up for use with your cube in PA, we can start preparing the cube for our data, and send it to PA. This is covered in Part 3